Azure Data Factory supports a Notebook activity that can be used to automate the unattended execution of a notebook in an Azure Databricks workspace.

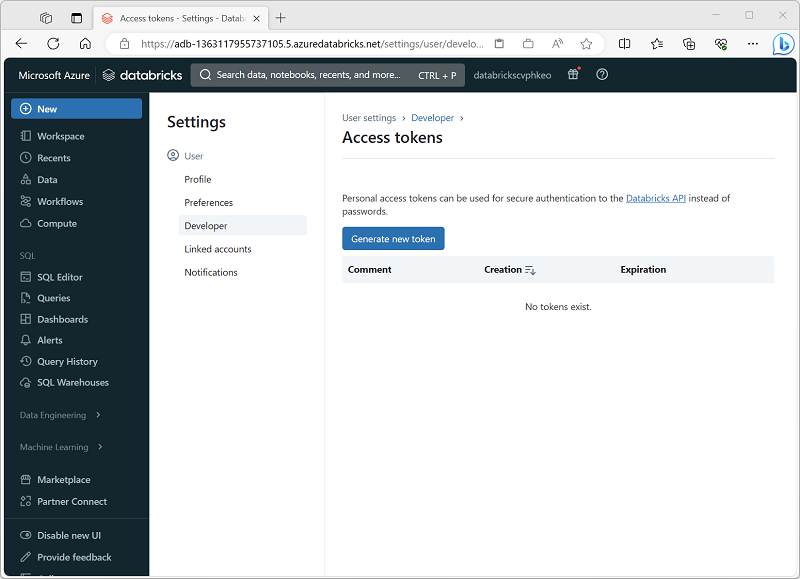

To run notebooks in an Azure Databricks workspace, the Azure Data Factory pipeline must be able to connect to the workspace, which requires authentication. To enable this authenticated connection, you must perform two configuration tasks:

- Generate an access token for your Azure Databricks workspace.

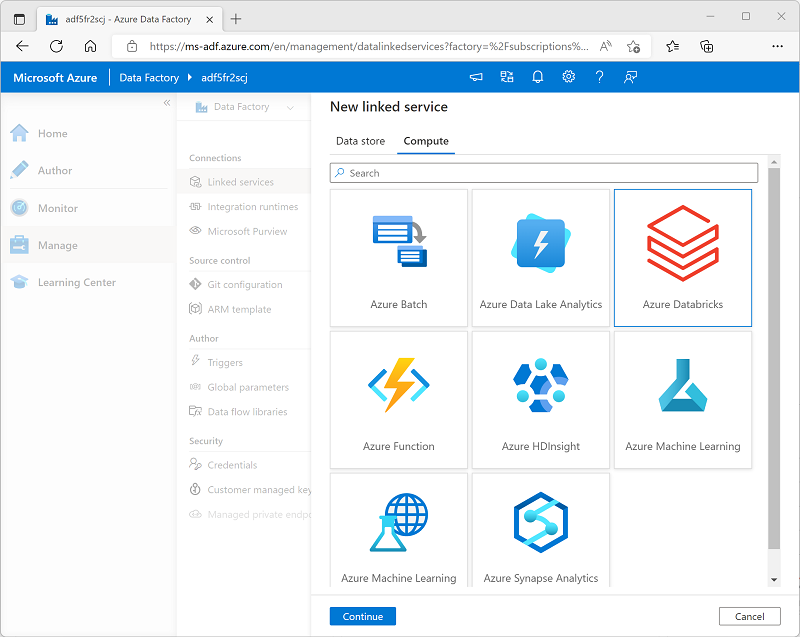

- Create a linked service in your Azure Data Factory resource that uses the access token to connect to Azure Databricks.

When you generate an access token for an application, you can specify an expiration period. After the token expires, you must generate a new one and update it in every client application that uses it.

To create an access token, use the Generate new token option on the Access tokens tab of the User Settings page in the Azure Databricks portal.

Creating a linked service

To connect to Azure Databricks from Azure Data Factory, you need to create a linked service for Azure Databricks compute. You can create a linked service in the Linked services page in the Manage section of Azure Data Factory Studio.
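Behind the Studio form, Azure Data Factory stores each linked service as a JSON definition. The following sketch builds a minimal Azure Databricks linked service definition as a Python dictionary so its shape is easy to inspect. The property names (`type`, `domain`, `accessToken`, `existingClusterId`) reflect our understanding of the Data Factory JSON format for an `AzureDatabricks` linked service; the service name, workspace URL, token, and cluster ID are hypothetical placeholders, and a real token should never be hard-coded in source files.

```python
import json


def databricks_linked_service(name: str, workspace_url: str,
                              access_token: str, cluster_id: str) -> dict:
    """Return a minimal Azure Databricks linked service definition (sketch).

    Property names mirror the JSON that Azure Data Factory stores for an
    AzureDatabricks linked service; all argument values are hypothetical.
    """
    return {
        "name": name,
        "properties": {
            "type": "AzureDatabricks",
            "typeProperties": {
                # URL of the Azure Databricks workspace
                "domain": workspace_url,
                # Access token generated on the Access tokens tab
                "accessToken": {"type": "SecureString", "value": access_token},
                # Run notebooks on an existing cluster in the workspace
                "existingClusterId": cluster_id,
            },
        },
    }


if __name__ == "__main__":
    definition = databricks_linked_service(
        name="AzureDatabricksLS",                                   # hypothetical
        workspace_url="https://adb-0000000000000000.0.azuredatabricks.net",  # hypothetical
        access_token="<access-token>",                              # placeholder
        cluster_id="0000-000000-abcdefgh",                          # hypothetical
    )
    print(json.dumps(definition, indent=2))
```

Keeping the token in a `SecureString` value is what lets Data Factory mask it when the definition is displayed.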

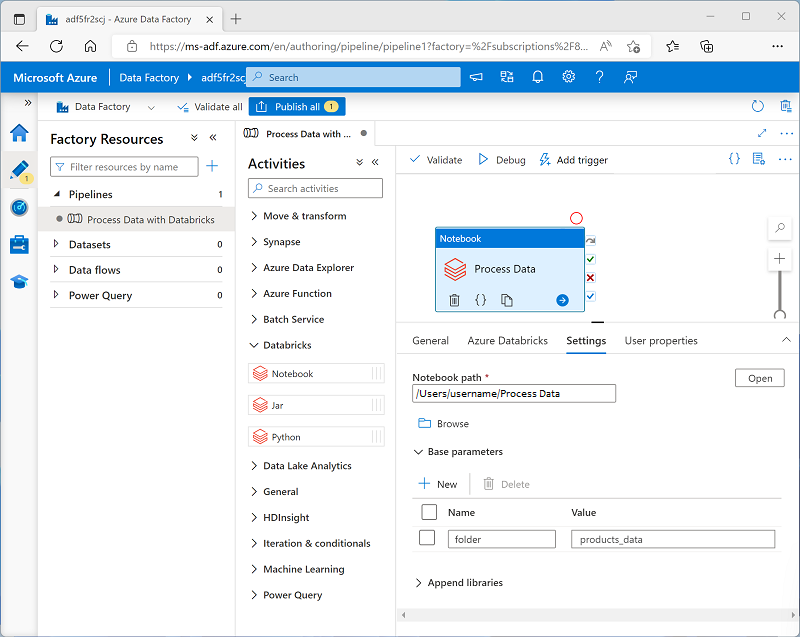

You can use parameters to pass variable values to a notebook from the pipeline. Parameterization enables greater flexibility than using hard-coded values in the notebook code.

To define and use parameters in a notebook, use the `dbutils.widgets` library in your notebook code. The following Python code defines a text widget (parameter) named `folder` and assigns it a default value of `data`:

```python
dbutils.widgets.text("folder", "data")
```

To retrieve a parameter value, use the `dbutils.widgets.get` function, like this:

```python
folder = dbutils.widgets.get("folder")
```

In addition to receiving parameter values, a notebook can pass a value back to the calling application by using the `dbutils.notebook.exit` function, as shown here:

```python
# Build the output path from the "folder" parameter and return it to the caller
path = "dbfs:/{0}/products.csv".format(folder)
dbutils.notebook.exit(path)
```
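`dbutils.notebook.exit` returns a single string, so returning more than one value means packing them into that string. One common approach, sketched below, is to serialize a JSON object. The `build_exit_payload` helper and its extra `folder` key are hypothetical, and the `dbutils` calls are left as comments because `dbutils` is only defined inside a Databricks notebook. In the pipeline, the exit value appears in the Notebook activity's output as `runOutput`.

```python
import json


def build_exit_payload(folder: str) -> str:
    """Hypothetical helper: pack several output values into one JSON string."""
    path = "dbfs:/{0}/products.csv".format(folder)
    return json.dumps({"path": path, "folder": folder})


# Inside the Databricks notebook (dbutils is not available elsewhere):
# folder = dbutils.widgets.get("folder")
# dbutils.notebook.exit(build_exit_payload(folder))

print(build_exit_payload("data"))
# prints {"path": "dbfs:/data/products.csv", "folder": "data"}
```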

To pass parameter values to a Notebook activity, add each parameter to the activity's Base parameters property, using the same name as the corresponding widget in the notebook (for example, `folder`).

In this example, the parameter value is specified explicitly as a property of the Notebook activity. You could instead define a pipeline parameter and assign its value dynamically to the Notebook activity's base parameter, adding a further level of abstraction.
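To make that extra level of abstraction concrete, the sketch below builds the JSON for a pipeline that declares a `folder` pipeline parameter and feeds it to the notebook's `folder` base parameter through the expression `@pipeline().parameters.folder`. It is expressed as a Python dictionary for inspection; the pipeline name, activity name, linked service name, and notebook path are hypothetical, and the structure reflects our understanding of the Data Factory pipeline JSON format rather than an exported definition.

```python
import json


def notebook_pipeline(notebook_path: str, linked_service: str) -> dict:
    """Sketch of a pipeline whose Notebook activity reads a pipeline parameter."""
    return {
        "name": "RunProductsNotebook",  # hypothetical pipeline name
        "properties": {
            # Pipeline parameter with a default that each run can override
            "parameters": {"folder": {"type": "string", "defaultValue": "data"}},
            "activities": [
                {
                    "name": "Notebook1",  # hypothetical activity name
                    "type": "DatabricksNotebook",
                    "linkedServiceName": {
                        "referenceName": linked_service,
                        "type": "LinkedServiceReference",
                    },
                    "typeProperties": {
                        "notebookPath": notebook_path,
                        # Base parameter names match the notebook's widget names;
                        # the value is evaluated from the pipeline parameter at run time
                        "baseParameters": {
                            "folder": {
                                "value": "@pipeline().parameters.folder",
                                "type": "Expression",
                            }
                        },
                    },
                }
            ],
        },
    }


print(json.dumps(notebook_pipeline("/Shared/products", "AzureDatabricksLS"), indent=2))
```

A later activity in the same pipeline could then read the notebook's exit value with an expression such as `@activity('Notebook1').output.runOutput`.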